With less than 3 months left before the retirement of Python 2.7, and with QUADS' much-needed migration to Python 3, we could not miss the opportunity to try out the now-native asyncio framework and identify workflows we could speed up.

What is asyncio and how does it make my code faster without multi-threading?

See what I did there?

I took the liberty of making some assumptions to illustrate this.

First and foremost, it makes my code faster. If we think of asynchronous communication as a modern phone call and of synchronous communication as a radio transmission, it is fair to expect async to bring a significant efficiency improvement.

Given this example, let’s not forget the io in asyncio, which refers directly to Input/Output operations. If your code has no blocking I/O operations (reading a file, querying a remote DB, making a request to a REST API, etc.) but you are still looking for a speed-up, asyncio will not help much, and you might want to consider parallelism instead (for CPU-bound Python code that usually means multiprocessing, since the GIL keeps threads from executing Python code in parallel).
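To make the I/O point concrete, here is a minimal sketch (not QUADS code; the function names and the 0.2 s delay are made up for illustration) that runs three coroutines twice: once with a blocking `time.sleep()`, standing in for CPU-bound work or a synchronous call, and once with the awaitable `asyncio.sleep()`, standing in for non-blocking I/O. Only the awaitable version lets the event loop overlap the waits.

```python
import asyncio
import time


async def blocking_step(delay: float) -> None:
    # time.sleep() never yields to the event loop, just like CPU-bound
    # work or a synchronous library call such as requests.get().
    time.sleep(delay)


async def awaitable_step(delay: float) -> None:
    # asyncio.sleep() suspends this coroutine and hands control back to
    # the loop, much like awaiting a network response from aiohttp.
    await asyncio.sleep(delay)


async def run_three(step, delay: float) -> float:
    # Run three copies of `step` concurrently and return the elapsed time.
    start = time.perf_counter()
    await asyncio.gather(*(step(delay) for _ in range(3)))
    return time.perf_counter() - start


def main() -> tuple:
    blocking = asyncio.run(run_three(blocking_step, 0.2))
    awaitable = asyncio.run(run_three(awaitable_step, 0.2))
    return blocking, awaitable


if __name__ == "__main__":
    blocking, awaitable = main()
    print(f"blocking: {blocking:.2f}s, awaitable: {awaitable:.2f}s")
```

On a typical machine the blocking run takes roughly 0.6 s and the awaitable one roughly 0.2 s: a coroutine only gives up the loop where it awaits something.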

"Without multi-threading" refers to the fact that concurrency is not the same thing as multi-threading: asyncio runs its tasks concurrently on a single thread (although it is possible to run separate event loops in separate threads via the `threading` library). At the core of asyncio is an event loop, which is fed multiple tasks to execute.

The loop runs until all tasks are completed. It executes a task's instructions until the task awaits a coroutine whose result is not ready yet, such as an API call; at that point the loop switches to the next task in the queue while the previous one waits for its result.

The loop keeps executing the next task until the response to the earlier API call arrives; once it has, and the running task yields at its own await, the loop resumes the earlier task, and so forth.
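A toy trace of that hand-off, assuming `asyncio.sleep()` as a stand-in for an API call (the task names and delays are arbitrary): A starts first and yields at its await, B runs until its own await, and B's shorter "request" completes first, so its response is handled before A's.

```python
import asyncio

events = []  # records the order in which the steps actually run


async def task(name: str, io_delay: float) -> None:
    events.append(f"{name}: send request")
    # Awaiting the simulated I/O suspends this task; the loop runs the next one.
    await asyncio.sleep(io_delay)
    events.append(f"{name}: handle response")


async def main() -> None:
    # Both tasks share one single-threaded event loop.
    await asyncio.gather(task("A", 0.2), task("B", 0.1))


asyncio.run(main())
for line in events:
    print(line)
# A: send request
# B: send request
# B: handle response
# A: handle response
```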

With this in place, we can execute a batch of I/O-heavy tasks concurrently and use the dead time between I/O calls to keep working on other tasks instead of blocking.

The Elephant in the Lab

As our infrastructure grows, QUADS' job of carving out ever bigger assignments has become harder to complete in a timely manner. On top of that, a single process that provisions more than 500 servers sequentially and synchronously is, without a doubt, going to be time-consuming, and it does not scale well.

Identifying where we could use asyncio within QUADS was easy. The first obvious candidate was the reprovisioning step.

More specifically, `quads --move-hosts` and, further down, `move_and_rebuild_hosts.py` (M&R from here on).

However, the fact that this task was the most demanding does not by itself make it the best candidate for asyncio; what matters is how much of its time is spent waiting on I/O.

How much I/O is in QUADS Reprovisioning?

M&R (move and rebuild) relies heavily on two services throughout its workflow: the Redfish API for out-of-band (OOB) management and Foreman for reprovisioning.

Illustrated here is a sequence diagram for the historical workflow of M&R:

Now multiply this by 500.

As you can see in the diagram, there are a couple of bottlenecks that asyncio can help with.

In this case, the workflow after the asyncio implementation would look something like this:

To make this possible, we had to fully migrate the QUADS Foreman API wrapper and Badfish from `requests` to `aiohttp`. All methods in these classes were converted to asyncio coroutines so they can be awaited from inside M&R.
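Stripped of the real API calls, the idea looks roughly like the sketch below. It is not the QUADS code: `redfish_step()` and `foreman_step()` are hypothetical stand-ins that sleep for 50 ms to mimic waiting on Badfish and Foreman, and `move_and_rebuild()` only mirrors the per-host ordering of M&R. Ten hosts handled one after another take about a second; gathered on the event loop, they take about as long as a single host.

```python
import asyncio
import time


# Hypothetical stand-ins for the real Badfish (Redfish) and Foreman calls;
# each sleep represents time spent waiting on a remote API.
async def redfish_step(host: str) -> None:
    await asyncio.sleep(0.05)


async def foreman_step(host: str) -> None:
    await asyncio.sleep(0.05)


async def move_and_rebuild(host: str) -> str:
    # Within one host the steps stay ordered: OOB work first, then rebuild.
    await redfish_step(host)
    await foreman_step(host)
    return host


async def sequential(hosts):
    # The old behaviour: one host at a time.
    return [await move_and_rebuild(h) for h in hosts]


async def concurrent(hosts):
    # Every host's workflow becomes a task on the same event loop.
    return await asyncio.gather(*(move_and_rebuild(h) for h in hosts))


def timed(coro_fn, hosts):
    start = time.perf_counter()
    result = asyncio.run(coro_fn(hosts))
    return result, time.perf_counter() - start


hosts = [f"host{i:02d}.example.com" for i in range(10)]
seq_result, seq_time = timed(sequential, hosts)
con_result, con_time = timed(concurrent, hosts)
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

The per-host steps still await each other, so the ordering inside one host is preserved; only different hosts overlap.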

Foreman DoS (or so we thought…)

We now have a fully functional, asynchronous, blazing-fast architecture, but Foreman was happier without the multitasking.

So our Foreman calls now look like this:

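```python
import aiohttp
from aiohttp import BasicAuth


# Method of the QUADS Foreman API wrapper (simplified; the method name is illustrative)
async def get(self, endpoint):
    async with aiohttp.ClientSession(loop=self.loop) as session:
        async with session.get(
            self.url + endpoint,
            auth=BasicAuth(self.username, self.password),
            verify_ssl=False,  # skip TLS certificate verification
        ) as response:
            result = await response.json(content_type="application/json")
    return result
```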

With this in place and the highest hopes, we ventured into rolling out a big (150 hosts/network) assignment and, to our (non-)surprise, found a couple of tracebacks in the execution and a couple of failed host moves that required a re-run.

```
2019-09-19 15:30:39,983 There was something wrong with your request.
Traceback (most recent call last):
  File "/usr/lib64/python3.7/site-packages/aiohttp/connector.py", line 822, in _wrap_create_connection
    return await self._loop.create_connection(*args, **kwargs)
  File "/usr/lib64/python3.7/asyncio/base_events.py", line 981, in create_connection
    ssl_handshake_timeout=ssl_handshake_timeout)
  File "/usr/lib64/python3.7/asyncio/base_events.py", line 1009, in _create_connection_transport
    await waiter
ConnectionResetError
```

Our first guess was that Foreman could not handle that many requests at once. Let’s bring in the semaphores.

We want to limit the number of concurrent calls to 20, so we wrap all Foreman calls in the semaphore's context manager:

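```python
import asyncio

import aiohttp
from aiohttp import BasicAuth

# Create the semaphore once and share it across all Foreman calls;
# a new Semaphore per request would never make any caller wait.
semaphore = asyncio.Semaphore(20)


async def get(self, endpoint):
    async with semaphore:  # at most 20 Foreman requests in flight
        async with aiohttp.ClientSession(loop=self.loop) as session:
            async with session.get(
                self.url + endpoint,
                auth=BasicAuth(self.username, self.password),
                verify_ssl=False,
            ) as response:
                result = await response.json(content_type="application/json")
    return result
```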
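A quick way to convince yourself that the semaphore caps concurrency, as long as every call shares the same instance, is the sketch below. `fake_foreman_call()` and `peak_concurrency()` are hypothetical helpers: the call sleeps instead of talking to Foreman and records how many calls are in flight at once.

```python
import asyncio

MAX_CONCURRENT = 20


async def fake_foreman_call(semaphore: asyncio.Semaphore, state: dict) -> None:
    async with semaphore:
        state["active"] += 1
        state["peak"] = max(state["peak"], state["active"])
        await asyncio.sleep(0.01)  # simulated request round trip
        state["active"] -= 1


async def peak_concurrency(n_calls: int, shared: bool) -> int:
    state = {"active": 0, "peak": 0}
    # Created inside the running loop, so it is bound to the right loop.
    shared_semaphore = asyncio.Semaphore(MAX_CONCURRENT)
    calls = []
    for _ in range(n_calls):
        # shared=False mimics creating a fresh Semaphore inside every request
        semaphore = shared_semaphore if shared else asyncio.Semaphore(MAX_CONCURRENT)
        calls.append(fake_foreman_call(semaphore, state))
    await asyncio.gather(*calls)
    return state["peak"]


shared_peak = asyncio.run(peak_concurrency(100, shared=True))
per_call_peak = asyncio.run(peak_concurrency(100, shared=False))
print(f"shared semaphore: {shared_peak}, per-call semaphore: {per_call_peak}")
# shared semaphore: 20, per-call semaphore: 100
```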

That alone did not make the errors go away, so let’s do some mod_passenger, Apache and sysctl tuning:

```
$ vim /etc/httpd/conf.d/05-foreman-ssl.conf
KeepAliveTimeout 75
MaxKeepAliveRequests 2000

$ vim /etc/sysctl.conf
net.core.rmem_max = 8388608
net.core.wmem_max = 8388608
net.core.rmem_default = 65536
net.core.wmem_default = 65536
```

We then went ahead with another assignment and realized the effort was futile: the issue persisted.

So we dug deeper into the Foreman logs and enabled debugging in a couple of places:

```
$ vim /etc/foreman/foreman-debug.conf
# Debug mode (0 or 1)
DEBUG=1

$ vim /etc/foreman-proxy/settings.yml
:log_level: DEBUG

$ service foreman-proxy restart

$ vim /etc/foreman/settings.yaml
:logging:
  :level: debug

$ touch ~foreman/tmp/restart.txt
```

We started the QUADS engines again and saw a traceback in the Foreman logs. It turns out this appears to be a bug on the Foreman side that does not allow us to update a user in a non-atomic fashion, that is, concurrently. We filed an issue with the Foreman team and put a hotfix in place until it is resolved. The hotfix removes the semaphore from all GET requests and puts all POST and PUT requests behind a single-slot semaphore, so they are queued and sent one at a time.
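A rough sketch of that hotfix, assuming a client whose requests all funnel through one coroutine. `ThrottledForemanClient` and its `_send()` helper are hypothetical, and the sleep replaces the real `aiohttp` request: GETs run freely, while POST and PUT share one `asyncio.Semaphore(1)`, so Foreman never sees two writes at once.

```python
import asyncio


class ThrottledForemanClient:
    """Hypothetical client: GETs run freely, POST/PUT are serialized."""

    def __init__(self):
        # Instantiate inside a running event loop (see main() below).
        self._write_slot = asyncio.Semaphore(1)
        self.active = {"GET": 0, "WRITE": 0}
        self.peak = {"GET": 0, "WRITE": 0}

    async def _send(self, kind, method, endpoint):
        # Track how many requests of each kind are in flight.
        self.active[kind] += 1
        self.peak[kind] = max(self.peak[kind], self.active[kind])
        try:
            await asyncio.sleep(0.01)  # stand-in for the real aiohttp request
            return method, endpoint
        finally:
            self.active[kind] -= 1

    async def get(self, endpoint):
        # Reads are not throttled at all.
        return await self._send("GET", "GET", endpoint)

    async def put(self, endpoint):
        # Writes queue up on a single slot, so they go out one at a time.
        async with self._write_slot:
            return await self._send("WRITE", "PUT", endpoint)

    async def post(self, endpoint):
        async with self._write_slot:
            return await self._send("WRITE", "POST", endpoint)


async def main():
    client = ThrottledForemanClient()
    reads = [client.get(f"hosts/{i}") for i in range(30)]
    writes = [client.put(f"users/{i}") for i in range(10)]
    await asyncio.gather(*reads, *writes)
    return client.peak


peak = asyncio.run(main())
print(peak)  # {'GET': 30, 'WRITE': 1}
```

A `Semaphore(1)` behaves like an `asyncio.Lock` here; either keeps writes strictly one at a time while reads stay fully concurrent.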

Benchmarks

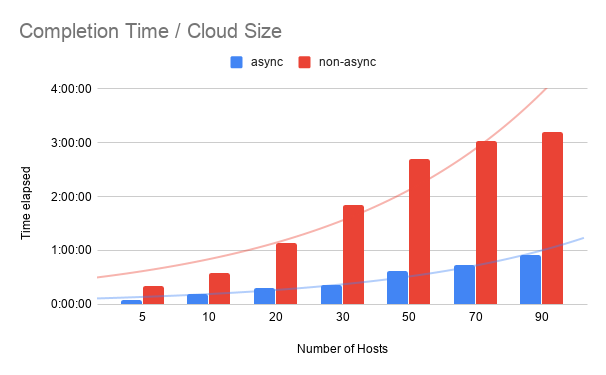

We now have a seemingly stable async process for provisioning and here are some of the results:

Conclusions

Asyncio has helped us cut our provisioning time by a factor of 3 for a 90-host assignment, and in theory this factor grows with larger assignments, since more tasks can run concurrently.

Provisioning (bare-metal systems/network/RBAC/IPMI automation) all ~700 hosts in our infrastructure can now be done in about 6 hours, whereas before it would have taken us at least 18 hours.

QUADS lets us schedule this ahead of time (typically for a Sunday), so our infrastructure is carved up, sliced and diced, and sets of hardware are handed out to various Engineering teams automatically.